What Are Guardrails?

Guardrails are protection mechanisms for AI systems that ensure that AI operates within ethical, legal, and technical boundaries. They control what an AI model can and cannot do. Especially with large language models (LLMs), they prevent harmful, risky, or undesirable results.

Guardrails intervene at multiple points in the AI process – from input to output. They control what the AI is allowed to process, how it responds, and what ultimately reaches the user.

Guardrails ensure:

- Secure processing of user input (e.g., protection against manipulation or disclosure of sensitive data)

- Consistent response quality (e.g., avoiding hallucinations)

- Brand-consistent output (e.g., appropriate tone and correct statements)

How are guardrails implemented?

Guardrails are based on rules, filters, or additional AI components. They often combine several methods:

- Rule-based systems: Clear ‘if-then’ logic

- Moderation by LLMs: The AI evaluates itself or other outputs

- Filters & blockers: Automatically remove or replace unwanted content

Many guardrails operate deterministically. This means they always deliver the same response for the same input.

What Types of Guardrails Are There? Purpose & Examples

AI guardrails can be divided into three main types, depending on where they intervene and their goal. Here's an overview of the three main categories:

1. Input guardrails

Purpose: Protection against dangerous or manipulative user input

These guardrails intervene before the AI even responds. They analyze whether a prompt is risky, inadmissible, or potentially harmful and block it if necessary.

Examples:

- Preventing the processing of questions like: "How do I access your CRM system?"

- Protection against so-called prompt injections, i.e., attempts to deliberately manipulate the AI.

More details on prompt injections can be found in our wiki article: ‘Prompt Injection: Risks for AI Systems Explained’.

2. Output Guardrails

Purpose: Control and quality assurance of AI responses.

These guardrails check what the AI outputs and intervene if the content is problematic, incorrect, or undesirable.

Examples:

- Filtering out toxic language (e.g., insults, hate speech, or political statements).

- Minimizing AI hallucinations by validating content.

- Ensuring that responses are factually correct, factual, and written in the right tone.

We explain in detail what exactly lies behind AI hallucinations in this article: ‘Wrong Answers From AI? This Is How AI Hallucinations Occur’.

3. Policy/Context Guardrails

Purpose: Compliance with company-specific rules and frameworks

These guardrails ensure that the AI operates in the correct context – i.e., it takes into account a company's specifications, certain legal requirements, or the desired communication style.

Examples:

- Enforcing the company's standard tone of voice (e.g., ‘Sie’ instead of ‘du’)

- Observing internal rules such as data protection guidelines or compliance requirements

- Preventing the AI from making statements that do not fit the brand identity

Why Guardrails Are Essential in 2026

Because AI systems without clear rules are vulnerable to errors, manipulation, and loss of control, structured security mechanisms are needed now more than ever. This is the only way to ensure that responses remain reliable, factual, and brand-appropriate, and consistently meet the quality standards of users and companies.

Targeted manipulation attempts are also noticeably increasing. Large language models are particularly in focus, as they respond openly to inputs. Three risks are particularly prominent:

- Jailbreak prompts: Protection mechanisms are circumvented, for example, through role-playing ('Pretend you're a hacker...') or ambiguous instructions.

- ‘No-safety-mode’ leaks: Internal prompts or configuration errors inadvertently deactivate security mechanisms.

- Prompt injection: Hidden instructions in emails or websites deliberately manipulate the AI from the outside.

In addition, when AI systems exhibit misconduct, this quickly becomes publicly visible and thus poses a real reputational risk. Media immediately pick up on problematic responses or discriminatory content.

Our article ‘The 6 Biggest Chatbot Fails and Tips on How to Avoid Them’ shows what such failures can look like.

At the same time, the increase in searches for terms like "AI guardrails" shows that public interest in control mechanisms is growing and awareness of the risks surrounding generative AI is increasing.

You can find more information on generative AI in the following wiki article: 'Generative AI: The Art of the Creative Algorithm'.

Which Regulations Make Guardrails Mandatory?

The EU AI Act requires protective measures for all AI applications with increased risk. These include medical systems, chatbots in customer service, and AI-supported decision-making processes. In the future, companies will be required to systematically identify risks and implement appropriate safeguards. This includes technical control mechanisms such as guardrails.

For more information on the EU AI Act, see: "EU AI Act in Focus: New Regulations for AI in Europe."

In addition, the new ISO/IEC 42001 standard defines a standardized AI management system. Technical control mechanisms – i.e., guardrails – are a key component here as well. The standard is aimed at organizations that develop, operate, or integrate AI systems and creates a binding framework for safe, ethical, and legally compliant AI use. These requirements include very specific ones. For example, companies must demonstrate that they conduct risk assessments, implement input and output controls, and ensure human oversight.

How Do Guardrails Work on a Technical Level?

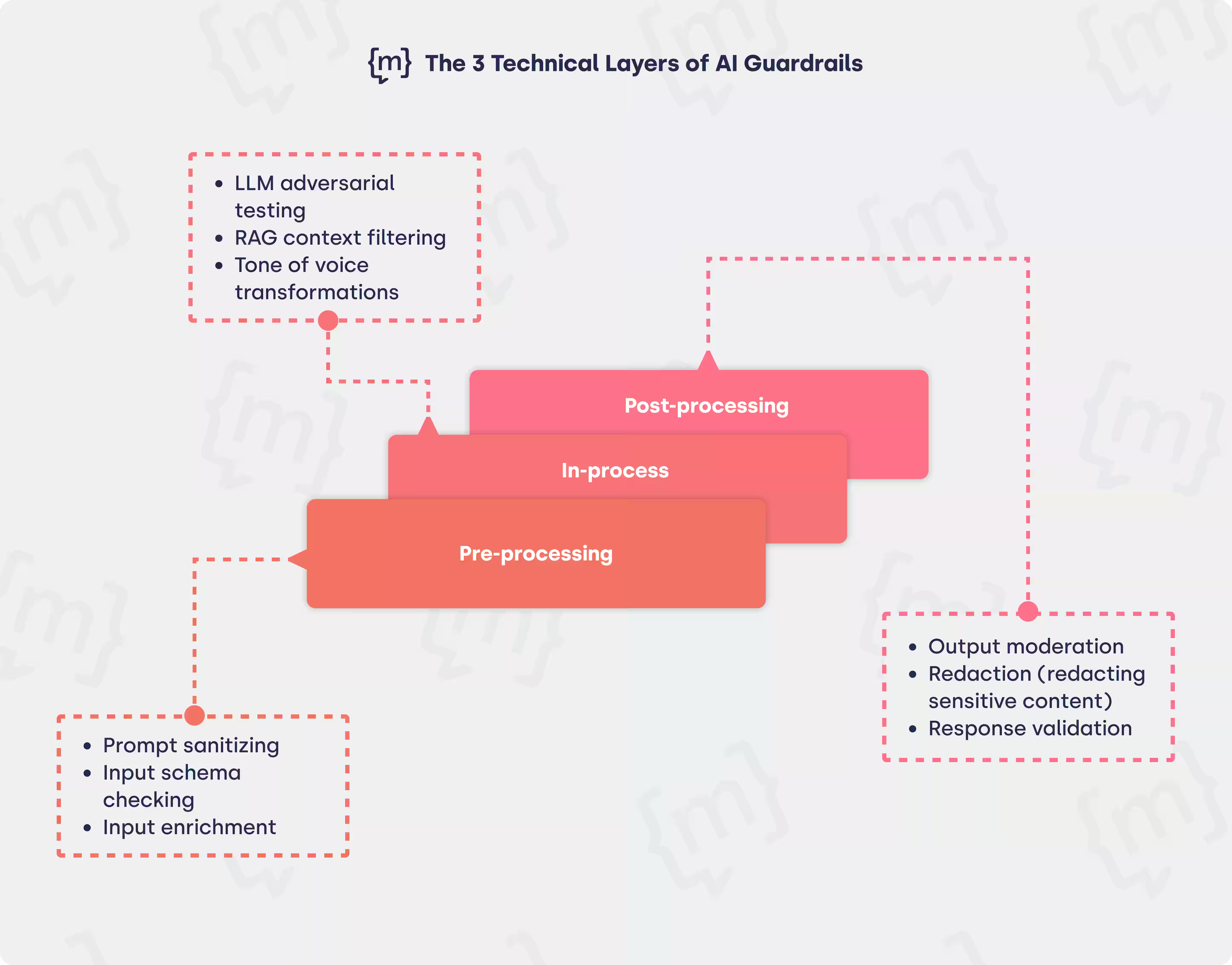

Guardrails operate on three technical levels: before, during, and after response generation.

What happens during pre-processing?

Even during pre-processing, user input is checked to determine whether it is dangerous or unwanted. Techniques like prompt sanitizing are used here, where problematic input is sanitized or blocked, for example, if someone asks for ‘hacking instructions’ or makes a manipulative request.

An input schema check ensures that the input format is correct. This is important, for example, if a chatbot is used to process complaints. If the order number is missing or the format is incorrect, the user is politely prompted to correct it.

In many cases, the input is also enriched, for example, with contextual information from the previous conversation (‘What was the topic before?’) or with role cues that improve the quality of the response. This allows the bot to specifically assume a specific role, such as technical support, customer service, or product assistant, and deliver appropriate tone and information.

What happens during in-processing?

So-called in-process methods are used during processing. These include LLM adversarial testing, which detects conspicuous response patterns early on, for example, when the AI begins to paraphrase risky content or creatively circumvent security rules.

Retrieval-Augmented Generation (RAG) is also specifically controlled during this phase. Relevant content from connected knowledge sources, such as an internal help desk or product catalog, is filtered before the AI generates a response. This prevents outdated, contradictory, or inappropriate information from entering the conversation.

A practical example: If a customer asks about the delivery time of a specific product, the AI only accesses the most current information from the database and not archived data from the previous year.

Our glossary article offers a deeper insight into the topic of RAG: 'Retrieval-Augmented Generation (RAG): the Knowledge Booster for LLMs.'

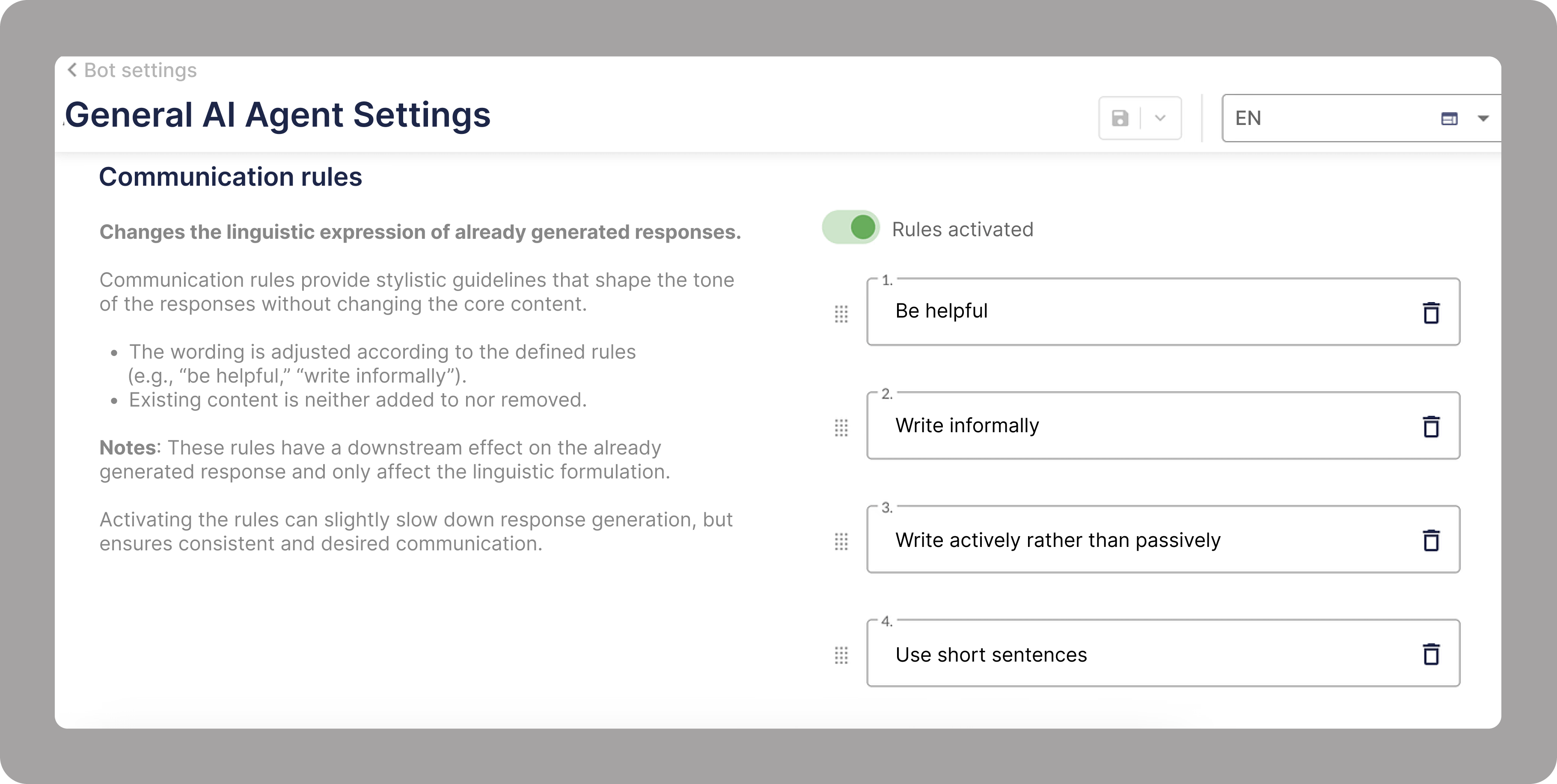

At the same time, responses can be stylistically adapted, for example through tone-of-voice transformations, which ensure that the AI communicates in the desired tone. Depending on the application, the same response can sound friendly, factual, or promotional, for example, with an empathetic tone in customer service or a more neutral tone in internal knowledge transfer.

What happens during post-processing?

After generation, the so-called post-processing follows. In this final step, the finished response is checked for problematic content. A process also known as output moderation. The goal is to reliably identify and prevent toxic, inappropriate, or simply false statements.

For example: If the AI outputs an offensive formulation or spreads an unsubstantiated conspiracy theory, the response is blocked in real time or replaced with a neutral version.

In particularly sensitive cases, redaction techniques are used. Personal or confidential information, such as credit card numbers, addresses, or internal project names, is automatically obscured before the response is displayed.

The final step is response validation. This ensures that the content is factually correct, compliant with regulations, and brand-appropriate. For example, it checks whether a product description complies with current company standards or whether statements about prices and features are actually accurate.

Practical Examples From moinAI

At moinAI, Guardrails ensure that chat remains not only accurate but also brand-aligned. Before a response is issued, the AI automatically checks whether the response quality is appropriate. This ensures that every response remains relevant, which is perfect for demanding use cases and true service quality.

At the same time, intelligent tone-of-voice transformation ensures that the chatbot always speaks in the right tone, whether friendly and approachable or factual and professional. The voice guidance is precisely aligned with the brand identity, creating a consistent experience across all channels.

Conclusion: Clear Rules Instead of Loss of Control

Guardrails not only make AI more secure, but they make it enterprise-ready in the first place. They ensure that chatbots and language models communicate reliably, in line with brand and regulations, even under pressure.

Especially in this year, in the context of the EU AI Act, rising user expectations, and public attention, technical protection mechanisms are no longer an add-on, but a strategic necessity.

Guardrails FAQ: Answers to the Top Questions

Do I need Guardrails in regulated industries?

Yes, absolutely. Guardrails help companies comply with legal requirements such as the EU AI Act and industry-specific standards such as ISO/IEC 42001. Guardrails are particularly indispensable in the healthcare, financial sector, and public administration sectors. Without clear protective mechanisms, compliance violations, liability risks, and reputational damage are a threat.

Do Guardrails slow down my LLM's response time?

Guardrails cause only a minimal delay. The additional checks such as validation, filtering, and tone adjustment are performed in milliseconds with a well-architected system. The delay is barely noticeable for users, and the gain in quality and security clearly outweighs the minimal time spent.

Which metrics demonstrate the ROI of Guardrails?

Guardrails measurably improve chat quality. Key metrics include:

- Fewer escalations and complaints thanks to secure, confident AI responses

- Higher first-time resolution rate (FTR) for user inquiries

- Consistent brand presence thanks to controlled tone and wording

- Fewer incorrect responses, which reduce rework and build trust

Are Guardrails only relevant for large companies?

No. Guardrails are important for companies of all sizes. Small and medium-sized businesses also benefit from clear protection mechanisms to ensure secure, compliant, and reliable AI usage. Guardrails are essential precisely because they help prevent risks such as compliance violations and incorrect answers – regardless of company size.

What are examples of AI guardrails?

- Input filters: Block dangerous or manipulative inputs, e.g., ‘How do I build a bomb?’ or hidden jailbreak prompts.

- Prompt sanitizing: Automatically cleans inputs, for example, by removing ambiguities or adding role cues (‘Act as technical support’).

- Tone rules: Ensure that answers match the brand style, e.g., factual in B2B or relaxed in e-commerce.

- Response validation: Checks statements for accuracy, brand, and regulatory compliance, e.g., regarding prices or sensitive topics.

- Redaction techniques: Automatically redact confidential data such as addresses or credit card numbers.

- Knowledge source filters (RAG): Check whether the information used is up-to-date, accurate, and trustworthy.

.svg)