What is AI slop?

The term AI slop refers to low-quality or irrelevant AI-generated content that is spreading across the internet and becoming a growing problem. It is created with generative AI tools, including large language models (LLMs), and spans images, videos, text, and music. The name reflects the fact that this content is usually of poor quality, is produced en masse with little control or review, and offers hardly any discernible added value.

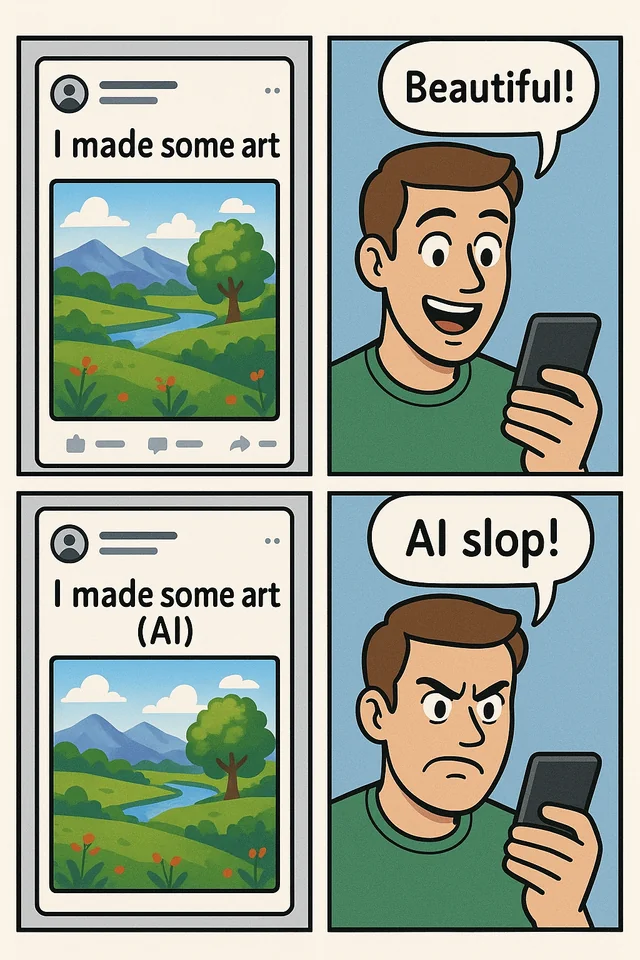

The core purpose of this content is to quickly generate attention and reach, and thus profit. The resulting mass of erroneous or misleading posts lowers the overall content quality of platforms such as TikTok, YouTube, and Meta and leads to a noticeable loss of trust among users. This is because many people find it difficult to identify AI content and to tell it apart from content created by real authors. AI slop can be found in all areas, and anyone with access to generative AI tools can create it. The results usually share similar characteristics.

Common Examples of AI Slop

AI slop can be found in all areas online: AI music on streaming platforms, hallucinated sources and “facts” in AI-generated articles, and AI-based fake news. Much of the content is very similar, such as generic travel videos with no reference to a real place or AI images that reproduce the same patterns over and over again. Probably the best-known example of AI slop is “Shrimp Jesus,” a surreal AI-generated graphic depicting Jesus formed from shrimp.

Other typical forms of AI slop can be observed in many content categories:

- Fake statements and deepfake clips of politicians or celebrities

- AI-generated fantasy animals and architecture

- Pseudo-educational videos with AI voices

- Generic product reviews or fabricated testimonials

Upon closer inspection, AI slop content does not convey any meaningful information and instead encourages pure consumption.

What is the purpose of AI slop?

AI slop primarily serves an economic purpose: producing content cheaply and in bulk to maximize reach and engagement on social media platforms, and thereby profit. Website operators or bots use AI tools such as ChatGPT or Midjourney to create and distribute content in large quantities. The growing amount of low-quality AI content also strengthens the case of AI critics for far-reaching regulation, which slows down the introduction of high-quality and productive AI applications. The most common motives are summarized here:

- Monetization: Automated posts aim to go as viral as possible in order to generate advertising or affiliate revenue

- Scaling: Search engines and feeds are flooded with irrelevant material to ensure maximum visibility without deploying a lot of personnel

- Manipulation: In some cases, content is also used for fraud, including, for example, emotionally charged fake images for clicks or politically distorted representations

- Prototyping: The low effort involved allows new formats or ideas to be tested en masse.

Without critical reflection and cautious use, there is a risk of misinformation and loss of trust. While the purpose of AI slop is primarily rapid scaling and monetary gain, the real problem arises from how the content is disseminated and algorithmically amplified, influencing digital spaces.

The AI Slop Process

The first step is to set up social media profiles or use profiles that already have reach. This allows generated content to be immediately distributed to a large audience. AI tools are used to quickly create large quantities of visually striking content and distribute it in this way. The more viral or popular the content is with platform users, the better the monetization. This can be done through platform bonuses for viral posts, affiliate links, or the sale of courses and training materials on how to create such content.

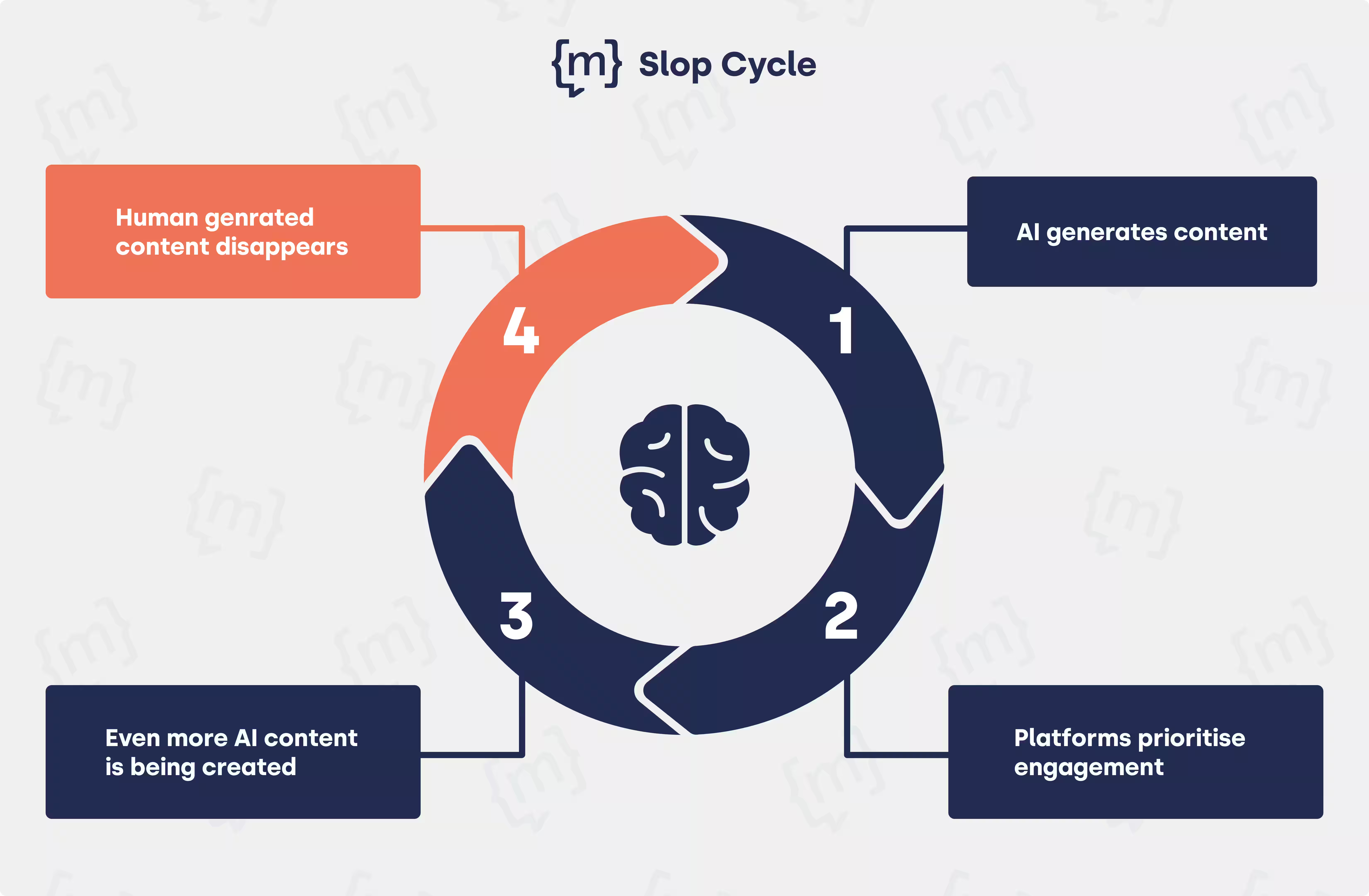

Social media feeds in particular are flooded with AI-generated content that tricks the algorithm through visual plausibility. This process is driven by so-called “agentic AI accounts” (AAA). The AI Forensics study “AI Generated Algorithmic Virality” (2025) describes the consequences of these automated social media accounts, which use generative AI tools to automate content creation and posting in whole or in part. An account is considered “agentic” if its last 10 posts consist exclusively of AI-generated images, even if manually created content was posted previously. As these accounts multiply, content production is changing fundamentally, and platforms are becoming more vulnerable to disinformation and loss of quality. The AI slop process creates a self-reinforcing cycle: platforms prioritize engagement, slop comes to dominate feeds and search results, and human-created, high-quality content is displaced.
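The “agentic” rule quoted from the study can be written as a short check. The following is only a minimal sketch: the `Post` type, the `is_ai_generated` flag, and the handling of accounts with fewer than 10 posts are assumptions made for illustration, and the sketch does not show how an image would be recognized as AI-generated in the first place.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """One post of an account (hypothetical structure for this sketch)."""
    post_id: str
    is_ai_generated: bool  # assumed to be labeled beforehand


def is_agentic(posts: list[Post], window: int = 10) -> bool:
    """Return True if the `window` most recent posts are all AI-generated.

    `posts` is assumed to be ordered from oldest to newest. Accounts with
    fewer than `window` posts are treated as not agentic; this is an
    assumption of the sketch, not a rule taken from the study.
    """
    if len(posts) < window:
        return False
    return all(post.is_ai_generated for post in posts[-window:])


# Five older manual posts followed by ten AI-generated ones.
history = [Post(f"old-{i}", False) for i in range(5)]
history += [Post(f"new-{i}", True) for i in range(10)]
print(is_agentic(history))  # True: earlier manual posts do not matter
```

Because only the most recent posts are inspected, an account that started out with manual content still counts as agentic once its latest 10 posts are all AI-generated, which matches the definition given above.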

What are the effects of AI slop?

The most serious effects of AI slop are as follows:

- Loss of quality and trust: Credibility of brands and media is increasingly being undermined

- Disinformation and extremism: Fake news and extreme worldviews are spreading faster and in a more uncontrolled manner

- Cultural loss: Original works are being overshadowed and original, creative content is losing visibility

- Cognitive strain: Attention spans and judgment suffer from constant sensory overload

- Environmental costs: Mass production of automated content increases energy consumption and strains resources.

In addition to the loss of quality, AI slop is leading to a significant loss of trust among users and brands, as it is becoming ever harder to distinguish between what is true and what is false. The focus on mass and speed in AI-supported content creation obscures what is special, while original human works are often used to train AI without consent or compensation. Because AI content is so hard to tell apart from human work, the perception of culture and of works in art and music is severely impaired.

Other serious consequences include the misuse of content and the easier reinforcement of extreme worldviews. Conventional media struggle to counter the flood of AI-generated, often false news, so disinformation and fake news are becoming more widespread. In addition, attention spans and critical thinking skills are being strained, which makes it even harder to recognize human-made content. Online, users describe the effect of AI slop as “breaking the internet” (Kurzgesagt, 2025), i.e., the internet is being destroyed by the flood of harmful or inferior content. Metaphorically speaking, AI slop is thus a kind of “microplastic of the internet” (Jochen Dreier, Deutschlandfunk): it spreads rapidly and in small pieces and poses underestimated dangers. The flood of trivial content obscures valuable works and hinders social discussions about truth and opinions. Last but not least, AI slop also has ecological consequences due to the high energy consumption involved in mass content generation.

User preferences: An example with DALL-E

A test with DALL-E, OpenAI's image generation tool, revealed interesting insights into user preferences for AI-generated images. Fifty human artworks were described and recreated by the AI (January 2025). Although many participants could usually distinguish the original works from the AI-generated ones, they often found the AI images more appealing and, surprisingly, preferred them more often. This can be explained by the so-called “bizarreness effect,” a psychological finding according to which bizarre and unusual representations in images, videos, or texts appeal more to the human brain. Such unusual stimuli stand out from the crowd and are better remembered, which makes AI creations and AI slop more attractive. It should be noted, however, that the initially appealing bizarreness effect quickly loses its impact once similar AI styles are disseminated en masse, and the unusual stimuli become less appealing in the long term.

Social platforms as enablers of AI slop

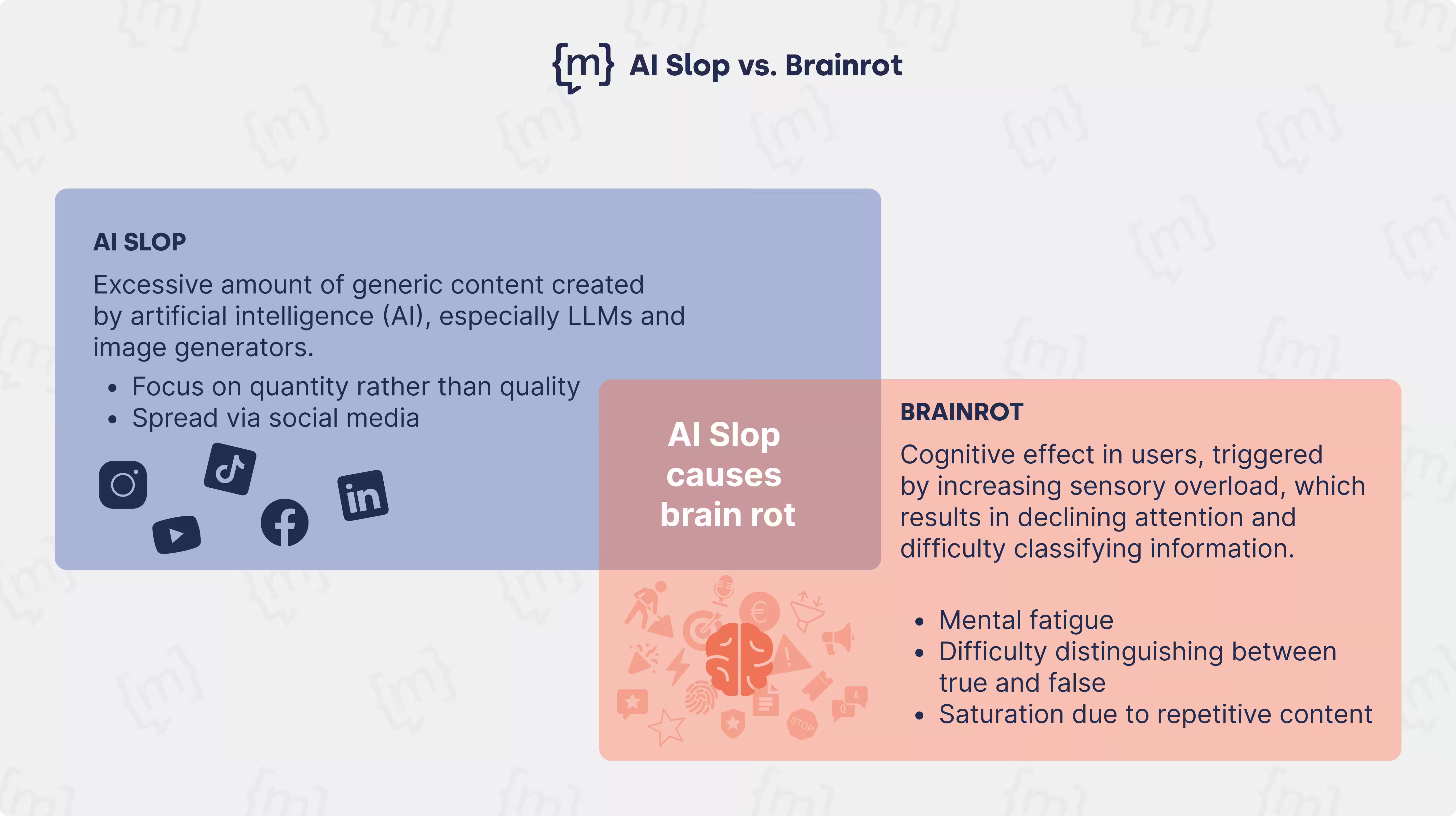

Social platforms are exploiting this trend and act as enablers of AI slop, i.e., as mediators or promoters, thereby further exacerbating the problem. A related term in the context of AI slop is “brain rot,” which refers to the effect on the audience: users consume or produce so much low-threshold, repetitive AI content that their perception or communication style noticeably adapts to it, resulting in loss of concentration and psychological stress (BBC, 2024).

The algorithms behind social networks do everything they can to keep users on the screen for as long as possible. AI slop is very effective here, and it is suggested continuously. Creating content is extremely cheap, but reviewing it is expensive and time-consuming, and there is a lack of clear editorial processes to ensure the relevance and quality of content. The more AI slop is shown, the more new content is modeled on it, creating a loop effect. Meta (Facebook and Instagram) is particularly criticized in this regard. The company actively promotes AI slop by increasingly suggesting content to users based on AI recommendations. In addition, Meta offers various AI tools of its own for generating AI content.

“Across Facebook, Instagram, and Threads, our AI recommendation systems are delivering higher quality and more relevant content (...) Improvements in our recommendation systems will also become even more leveraged as the volume of AI-created content grows.”

Mark Zuckerberg at the third-quarter 2025 results conference call, October 2025

Platforms such as Meta, YouTube, and TikTok have continued to introduce monetization programs that reward creators for viral content, thereby massively stimulating AI slop. AI content can be scaled quickly and cheaply, with affiliate programs paying for views and engagement.

What to do: Tips for combating AI slop

Platforms such as Meta and YouTube need to adjust their algorithms to prioritize recognizable added value rather than pure engagement. Attempts to prioritize credibility and editorial review through algorithm adjustments are evident, but still far from sufficient.

On the user side, measures against AI slop include technical AI detectors and thorough quality checks before using content. Tools such as GPTZero or Writer.com can be used to identify AI-generated texts and recognize typical AI patterns. Users can control to a certain extent which content is displayed to them by consciously reporting content or marking it as “not interested.” The more real profiles and creators are supported, for example through likes and follows, the less AI slop is shown and consumed.

The EU AI Act (in force since August 2024) stipulates clear transparency requirements for synthetic content: providers of generative AI must mark outputs (text, images, audio, video) in a machine-readable format, and deepfakes must be labeled. Violations are punishable by fines of up to 3% of global turnover. However, the AI Forensics study (2025) shows a lack of enforcement of labeling. A comprehensive and mandatory labeling infrastructure and audit ecosystems are crucial to curbing AI slop.

Conclusion

Just because content can be created in seconds doesn't mean it's right or important. It is therefore becoming increasingly essential for users to critically examine the value of content: Is the post a human-created work or an AI creation? Is it meaningful content with appropriate AI support or generated AI slop with no added value?

Companies in particular should be aware of this difference and only use AI where it can have a real impact. Modern large language models (LLMs) enable fast, scalable content production and processing, but they only improve quality if they are precisely controlled and professionally reviewed. If used incorrectly, they increase information overload and make knowledge management more difficult.
