Definition: What is ethical AI?

There is no uniform definition of ethical AI, but recognized international guidelines provide guidance. In its recommendation on the ethics of artificial intelligence, UNESCO defines ten basic principles that can serve as guidelines.

Ethical AI follows these principles and ensures that systems operate in accordance with social values and norms. It goes beyond mere compliance with rules and ensures that AI systems work in the interests of users.

The European Commission's High-Level Expert Group on AI defines trustworthy AI by three key criteria: legality, adherence to ethical principles and technical robustness. The Fraunhofer Institute offers an in-depth scientific analysis with its approach to understanding and applying trustworthy AI and related concepts. (UNESCO, 2022)

How is trust in AI solutions built?

Trust is based on effectiveness and acceptance.

Ethical AI aims to enable trust. But trust is not a technical feature that can simply be built into a system. It arises through social negotiation between humans and machines. Users must be able to decide for themselves whether they want to trust an AI system. To make that possible, AI must act in a transparent, respectful and context-sensitive manner. Only then will it be perceived as a reliable partner in customer interaction and deliver its full added value.

Trust is the decisive factor in making AI systems socially effective and economically successful. It is not based on technical perfection, but on successful interactions. Three key elements are crucial here:

- Understanding: Users must feel that the system understands their concerns.

- Transparency: AI decisions must be comprehensible.

- Control options: It must be clear when and how a human can intervene.

Ethical AI creates trust not through technology alone, but through the conscious design of the relationship between humans and machines. Ethics acts as a "relationship architecture": it structures the interaction in such a way that users can make free and informed decisions – and trust can develop as the basis for the success of AI systems. (See also: Chatbot Acceptance: What is the acceptance of a chatbot as a communication channel?)

How does regulation create guidelines – and what shapes ethics beyond that?

Legal requirements such as the GDPR and the EU AI Act set clear guidelines for the use of AI. They also contain ethically relevant requirements: the GDPR, for example, calls for data minimisation, purpose limitation and transparency to ensure the responsible handling of user data. The AI Act classifies AI systems according to risk levels and defines strict requirements for transparency, traceability, oversight and documentation for high-risk applications.

However, regulation does not cover all ethical aspects. Although it addresses key issues such as data minimisation, security and human control, it does not provide a comprehensive, value-based framework. Issues such as fairness, inclusion, sustainability and the common good often remain unregulated.

This is where ethical AI comes in: it complements legal requirements with a conceptual and design perspective. Companies that actively implement ethical standards can set themselves apart in a positive way – for example, through:

- inclusive design that takes all user groups into account,

- clear accountability structures that go beyond minimum requirements,

- open communication about the goals and limitations of AI.

Ethical AI thus becomes a strategic differentiator.

What are the risks associated with using AI in customer interactions?

AI systems, whether chatbots or recommendation systems, carry specific risks. They can unintentionally discriminate, draw false conclusions or create uncertainty. (See also the article "Risks posed by AI: a look at the risks of artificial intelligence".) Typical risk areas are:

Misinformation: Misguidance with consequences

Incorrect or incomplete information can directly harm customers. A chatbot that provides incorrect product information or answers legal questions incorrectly not only causes frustration – in the worst case, it can lead to wrong decisions with financial or health consequences.

This is particularly critical in sensitive areas such as financial advice or medical information. In these areas, transparency and disclosure of sources are not optional, but an obligation.

This article describes how AI hallucinations arise.

Frustrating experiences: when technology becomes a hurdle

Chatbots are supposed to help – but when they don't understand user concerns or get caught up in endless loops, the effect is reversed. The Call Centre Association's (CCV) 2025 Customer Service Barometer shows that short waiting times and the feeling of being taken seriously are crucial for customers. (CCV, 2025) If these expectations are not met, the risk of churn increases – especially in industries with low barriers to switching. Studies such as those conducted by the Qualtrics XM Institute show that over 60% of customers switch providers after negative experiences. (Qualtrics XM Institute, 2024)

A poorly designed chatbot quickly becomes a barrier rather than a bridge. The result: declining customer satisfaction and rising churn rates. It is therefore crucial that systems respond in a context-sensitive manner and seamlessly hand over to human contact persons in complex situations. This is the only way to ensure long-term trust in AI solutions.

Reputational risks: when AI jeopardises your image

Uncontrolled AI responses – such as discriminatory statements, inconsistent answers or inappropriate content – can go viral within hours. Particularly sensitive: contradictory behaviour that users perceive as arbitrary or manipulative.

Such incidents not only damage customer trust, but often attract media attention and regulatory scrutiny. Once destroyed, a reputation can only be restored with great effort.

Without clear ethical guidelines, the risk of reputational damage, customer loss and internal acceptance problems increases – and with it the danger that AI projects will fail before they can realise their potential.

This article describes "The 6 biggest chatbot fails and tips on how to avoid them".

Best practices for ethical chatbot implementation

Ethically responsible chatbots do not happen by chance. They are the result of conscious design and are based on clear principles.

Responsibility and traceability are fundamental: every interaction must be documented transparently. This not only serves quality assurance purposes, but also makes it possible to clarify responsibilities in serious cases and continuously improve processes. Only through complete logging can errors be analysed and future interactions optimised.
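What such logging could look like in practice is sketched below. This is a minimal illustration, not a prescribed implementation: the record fields, the `log_interaction` helper and the JSON Lines file format are all assumptions made for this example. In a real deployment, the logged content would also have to respect the GDPR principles mentioned above, for instance by minimising or pseudonymising personal data.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class InteractionRecord:
    """One logged chatbot turn (all field names are illustrative)."""
    conversation_id: str
    user_message: str
    bot_response: str
    model_version: str
    handed_over_to_human: bool
    timestamp: str


def log_interaction(path, conversation_id, user_message, bot_response,
                    model_version, handed_over_to_human=False):
    """Append one interaction as a JSON line and return the record."""
    record = InteractionRecord(
        conversation_id=conversation_id,
        user_message=user_message,
        bot_response=bot_response,
        model_version=model_version,
        handed_over_to_human=handed_over_to_human,
        # UTC timestamps keep logs comparable across systems.
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record), ensure_ascii=False) + "\n")
    return record
```

Recording the model version alongside each turn is a deliberate choice in this sketch: it makes it possible to trace an incorrect answer back to the system state that produced it.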

Transparency and explainability are crucial for user trust. Users must be able to understand how and why the chatbot arrives at certain answers. Honesty is particularly important when there are gaps in knowledge: An ethical chatbot communicates openly when it cannot answer a question reliably – instead of providing uncertain or incorrect information.
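One simple way to express this honesty rule in code is a confidence threshold, sketched below. The `Answer` type, the `confidence` score between 0 and 1, the threshold of 0.7 and the fallback wording are hypothetical; whether and how a given chatbot platform exposes a confidence value is an assumption here. The sketch also treats an answer without sources as unreliable, which is one possible design choice rather than a fixed rule.

```python
from dataclasses import dataclass, field

# Illustrative wording; a real bot would use its own tone of voice.
FALLBACK_TEXT = (
    "I'm not sure I can answer that reliably. "
    "Would you like me to connect you with a colleague?"
)


@dataclass
class Answer:
    text: str
    confidence: float  # assumed score in [0, 1] from the underlying model
    sources: list = field(default_factory=list)


def respond(answer, min_confidence=0.7):
    """Return the answer with its sources, or an honest fallback."""
    if answer.confidence < min_confidence or not answer.sources:
        return FALLBACK_TEXT
    return f"{answer.text} (Sources: {', '.join(answer.sources)})"


print(respond(Answer("Returns are free for 30 days.", 0.92, ["Returns policy"])))
print(respond(Answer("Probably yes.", 0.40, ["FAQ"])))
```

The first call prints the answer together with its source; the second falls below the threshold and prints the fallback message instead.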

Human control remains indispensable. In sensitive or complex situations, a human must always make the final decision. Users must know at all times how they can seamlessly contact a human representative. This prevents frustration and strengthens acceptance of the technology.
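The handover logic described here can be sketched as a small decision function. The topic labels, trigger phrases and the limit of two failed turns are purely illustrative assumptions, and the substring matching is deliberately naive; a production system would more likely rely on intent detection.

```python
SENSITIVE_TOPICS = {"medical", "legal", "financial_advice", "complaint"}  # illustrative
HANDOVER_PHRASES = ("human", "agent", "real person")                      # illustrative


def needs_human(user_message, topic, failed_turns, max_failed_turns=2):
    """Return a reason string if a human should take over, else None."""
    text = user_message.lower()
    # An explicit user request always wins.
    if any(phrase in text for phrase in HANDOVER_PHRASES):
        return "user_request"
    # Sensitive topics are escalated regardless of bot confidence.
    if topic in SENSITIVE_TOPICS:
        return "sensitive_topic"
    # Repeated misunderstandings break the "endless loop".
    if failed_turns >= max_failed_turns:
        return "repeated_misunderstanding"
    return None
```

Returning a reason rather than a plain yes/no means the handover can be documented and the human representative knows why the conversation was passed on.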

Awareness and education form the basis for a reflective approach to AI. Both employees and customers need understandable knowledge about how the system works and its limitations. Only those who understand AI can use it sensibly and exploit its potential. Regular training and clear communication guidelines are essential here.

Checklist: What makes a chatbot ethical

This article describes the 5 most important tips for a successful chatbot.

The ethical value chain: from principles to profit

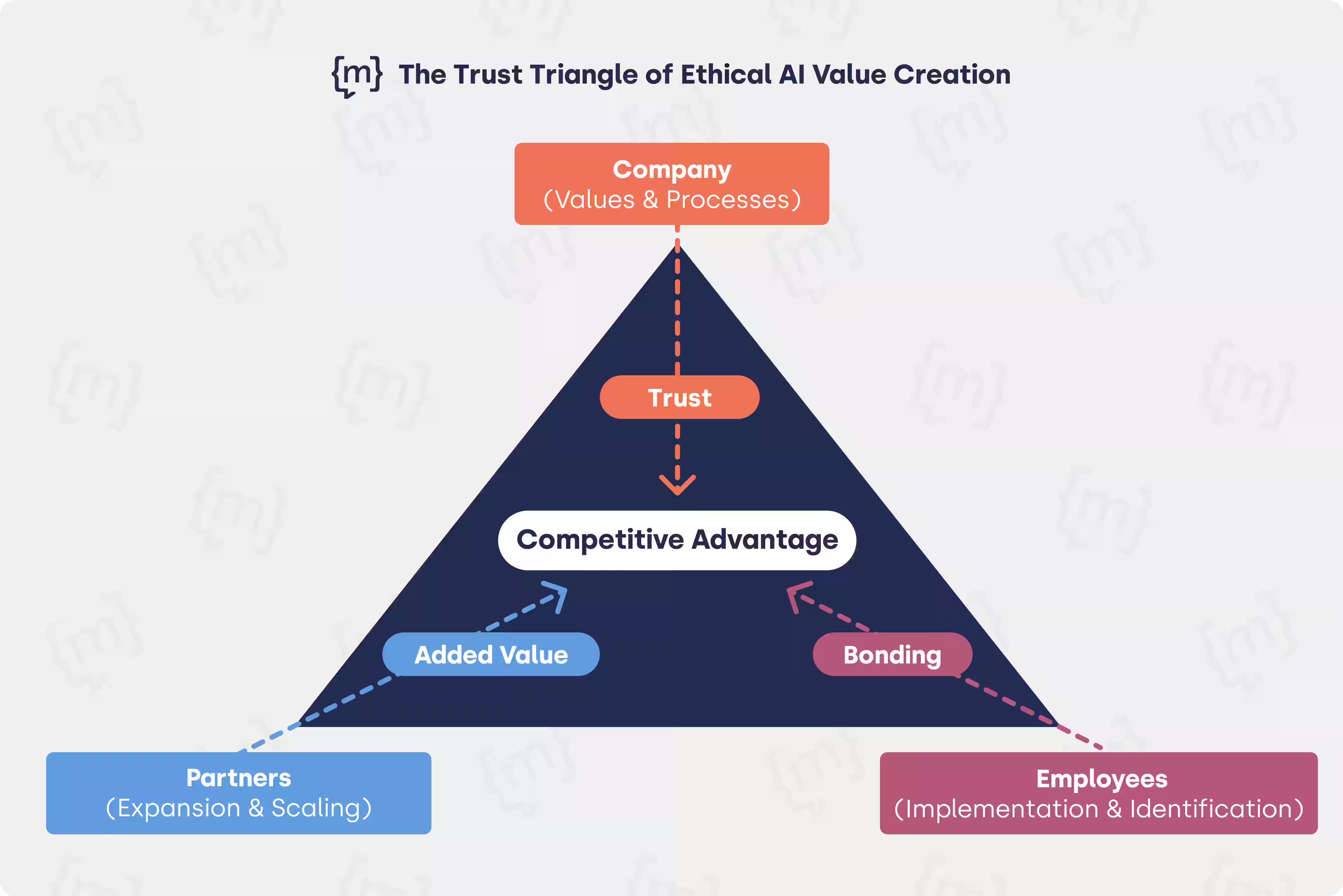

At the beginning of every ethical AI value chain is a stable triangle: companies, employees and partners. These three players must act in harmony to build trust as a common basis. Companies define values and processes, employees implement them in practice, and partners extend the impact beyond organisational boundaries.

When these relationships of trust work, loyalty is created. Customers trust the service, employees trust the technology, and partners trust the collaboration. This results in concrete added value – for example, through more efficient processes, higher conversion rates or lower staff turnover. This added value ultimately forms the basis for a real competitive advantage.

From chatbot to AI strategy – a roadmap for organisations

Ethical AI requires a clear roadmap that combines attitude and technology. The following five steps lead to responsible implementation:

1. Identify areas of application and prioritise use cases

Start by determining the areas where AI provides the greatest benefit – whether in customer communication, process efficiency or data analysis. Prioritise use cases according to their potential for added value and feasibility in order to achieve visible success quickly and build acceptance.

2. Systematically assess risks – technical, legal and ethical

Each use case must be reviewed for potential risks. Technically, this involves the stability and security of the solution. Legally, compliance with the GDPR and EU AI Act is crucial. Ethically, the focus is on adherence to principles such as transparency, fairness and user control. This is the only way to build trust in the technology.

3. Define guidelines for development and operation

Clear rules for governance are essential. Who makes decisions about the use of AI? How is data used and protected? How is it ensured that users can understand how AI works? These questions should be set out in binding guidelines.

4. Empower teams through training, tools and support

Employees need not only access to AI tools, but also the knowledge to use them responsibly. Training on AI basics and ethical guidelines is just as important as clear points of contact for questions and regular reflection workshops to exchange experiences.

5. Establish feedback and monitoring processes

Sustainable AI use thrives on continuous improvement. Integrate direct user feedback, for example through surveys or rating functions, and use automated monitoring to keep track of performance KPIs. Regular reviews help to systematically incorporate insights into further development.
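As a rough illustration of step 5, user ratings and conversation outcomes can be condensed into a few KPIs that are checked against thresholds. The KPI names, the input format and the threshold values below are assumptions chosen for this sketch, not recommended targets.

```python
def summarise_kpis(conversations):
    """Compute simple KPIs from a list of conversation dicts.

    Each dict is assumed to have 'resolved' (bool), 'handed_over' (bool)
    and 'rating' (int 1-5, or None if the user gave no rating).
    """
    total = len(conversations)
    if total == 0:
        return {"resolution_rate": None, "handover_rate": None, "avg_rating": None}
    ratings = [c["rating"] for c in conversations if c["rating"] is not None]
    return {
        "resolution_rate": sum(c["resolved"] for c in conversations) / total,
        "handover_rate": sum(c["handed_over"] for c in conversations) / total,
        "avg_rating": sum(ratings) / len(ratings) if ratings else None,
    }


def alerts(kpis, min_resolution_rate=0.7, min_avg_rating=3.5):
    """Return the names of KPIs that fall below their (assumed) thresholds."""
    flagged = []
    if kpis["resolution_rate"] is not None and kpis["resolution_rate"] < min_resolution_rate:
        flagged.append("resolution_rate")
    if kpis["avg_rating"] is not None and kpis["avg_rating"] < min_avg_rating:
        flagged.append("avg_rating")
    return flagged
```

The flagged KPI names could then feed into the regular reviews, so that a drop in resolution rate or user ratings triggers a closer look rather than going unnoticed.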

Verged's Enterprise AI Operating Model (EAOM) offers a proven structure for this. It integrates governance, platform strategy, organisational anchoring and enablement into a holistic framework. The modular structure with elements such as "4x OPEN" enables scalable and sustainable implementation.

Further information can be found in Verged's AI Governance Playbook.

Conclusion: Ethical AI as an investment in the future

Ethical AI is not an obstacle to progress, but rather its foundation. In the area of customer interaction, the responsible use of AI is becoming a key differentiator. Companies that set clear standards and build trust today not only secure the acceptance of their customers, but also lay the foundation for long-term market share and competitive advantages.

Because one thing is certain: technology alone does not create added value – it is the trust of users that makes AI truly effective.

[[CTA headline="From principle to practice: Responsible AI for your business" subline="Test now how ethical AI with moinAI can be measurably translated into better customer interaction and more efficient processes." button="Test now"]]