What Is a Language Model?

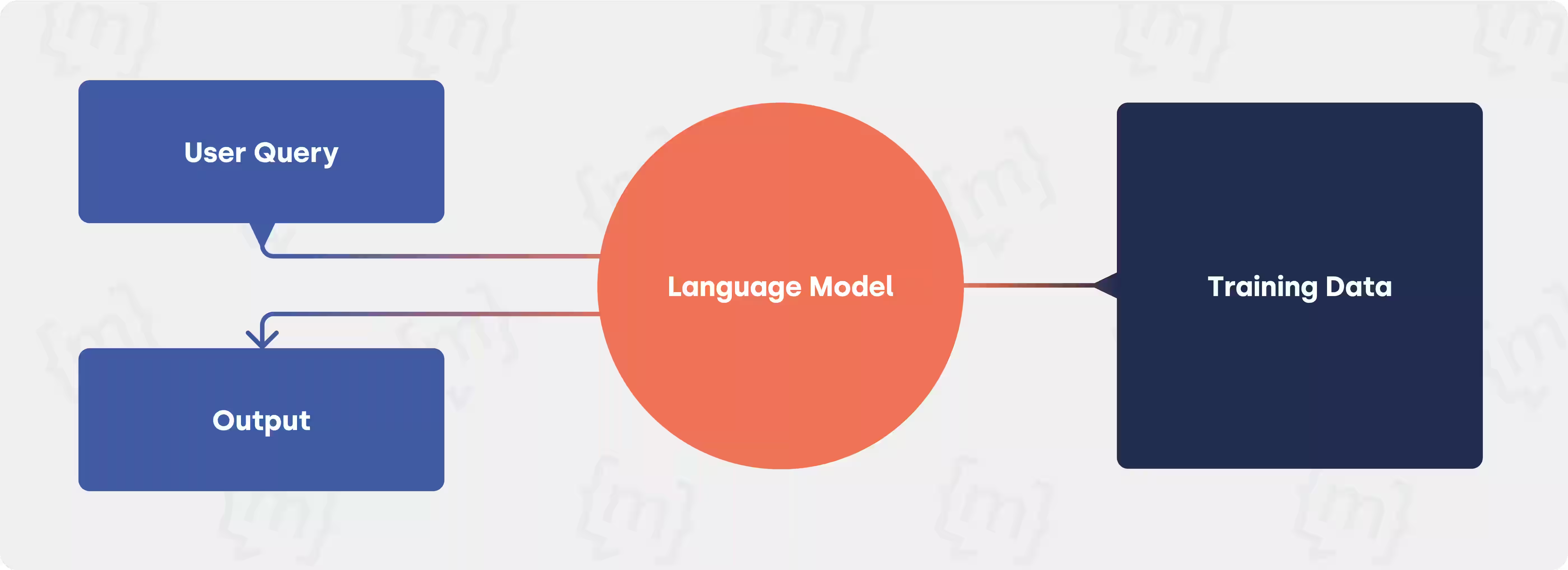

A language model is a computer program that processes and generates natural language. It is a statistical model trained to recognize patterns in text or speech data and to use those patterns to predict what text or speech comes next. Language models form the basis for numerous applications in the field of natural language processing (NLP), including text generation and chatbots.

Typically, the term language model is used for models that predict how likely it is that a given word will follow a part of a sentence. For example, given the beginning of a sentence such as “I'm packing my,” a good model assigns a higher probability to the word “suitcase” than to the word “airplane.” Other variants, such as BERT, instead estimate which words are likely to fill arbitrary gaps in a sentence.
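To make the idea of next-word probabilities concrete, here is a minimal sketch of a bigram model, which estimates how likely a word is given only the single word before it. The tiny corpus and the function names are made up for illustration; modern language models use neural networks and much longer contexts rather than simple counts.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for this illustration only.
CORPUS = [
    "i am packing my suitcase",
    "i am packing my suitcase today",
    "i am packing my bag",
    "my airplane leaves tomorrow",
]

def train_bigram_counts(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probability(counts, prev, candidate):
    """Estimate P(candidate | prev) as a relative frequency."""
    total = sum(counts[prev].values())
    if total == 0:
        return 0.0  # the previous word never appeared in the corpus
    return counts[prev][candidate] / total

counts = train_bigram_counts(CORPUS)
p_suitcase = next_word_probability(counts, "my", "suitcase")
p_airplane = next_word_probability(counts, "my", "airplane")
print(f"P(suitcase | my) = {p_suitcase:.2f}")
print(f"P(airplane | my) = {p_airplane:.2f}")
```

In this toy corpus, “suitcase” follows “my” more often than “airplane” does, so it receives the higher probability.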

Modern language models are often neural networks, especially those based on transformer architectures, which can efficiently process large amounts of data. We take a detailed look at large language models in the article The biggest large language models.

Why Are Language Models So Relevant Today? Meaning and Context

Language models are particularly relevant today as they are used in many areas, such as automatic text translation, speech recognition, text generation, social media data analysis, and chatbot development. They enable large amounts of data to be analyzed and interpreted quickly and efficiently, leading to significant advances in many industries.

What Is behind the GPT Language Models?

GPT-4 and GPT-5 are advanced language models released by OpenAI that represent the latest generation of the GPT series. They are based on the Transformer architecture and are capable of performing complex tasks, including text generation, translation, summarization, language comprehension, program code creation, and multimodal applications.

Compared to earlier models such as GPT-3, GPT-4 and GPT-5 are characterized by the following features:

- Larger context windows

- Higher accuracy

- Fewer hallucinations

- Improved abilities in handling complex tasks

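The models are based on a deep neural network architecture and learn from huge amounts of text data using a method often called “unsupervised learning.” More precisely, it is self-supervised learning: the model is trained to predict the next piece of text, so the training signal comes from the text itself and no manually labeled data is needed. The GPT models are considered milestones in NLP and AI research and form the basis for numerous applications, including AI chatbots. We have summarized more information about the GPT models and ChatGPT in this article.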
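How a model can learn from raw text without manual labels can be sketched in a few lines: every position in a text yields a training example whose target is simply the next word. The function name and the `context_size` parameter below are hypothetical, and splitting on whitespace is a simplification; real GPT models work on subword tokens and far longer contexts.

```python
def next_word_examples(text, context_size=3):
    """Turn raw text into (context, target) pairs.

    The target is always the word that follows the context,
    so the labels come from the text itself.
    """
    words = text.split()
    examples = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]
        examples.append((context, words[i]))
    return examples

examples = next_word_examples("language models learn from raw text")
for context, target in examples:
    print(" ".join(context), "->", target)
```

A six-word sentence already produces five training examples, which hints at why large text collections provide so much training signal.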

Is ChatGPT a Language Model?

Yes, ChatGPT is a language model. More specifically, ChatGPT is a large language model based on OpenAI's GPT-4 architecture or newer versions such as GPT-4o. The model is trained to understand and generate natural language, for example, to hold a dialogue with humans or answer questions.

ChatGPT is a typical generative language model (GLM) that has been trained on extensive text data, such as Wikipedia articles, website content, and knowledge databases. Importantly, the model can generate text in different languages and act in a context-sensitive manner. It also supports multimodal input, meaning that the model can process images in addition to text.

Overall, ChatGPT is a significant example of the progress made in the development of language models and their application in various areas, particularly in the field of human interaction and communication.

How Are Language Models and AI Interrelated?

Language models are a central component of artificial intelligence (AI). AI systems can use language models to develop human-like language skills. AI systems based on language models can be used in many areas of application, such as customer service, language teaching, and text generation (ideal for chatbots). These systems can make interactions with users more human-like and effective by processing and responding to natural language in real time.

Language models are an application of machine learning, which in turn is an important component of AI. Machine learning refers to the ability of computers to learn from experience without having to be explicitly programmed. Modern systems often combine language models with external data sources, agent functions, or retrieval-augmented generation (RAG), enabling them to provide dynamic and context-aware responses.
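The basic idea behind retrieval-augmented generation can be sketched as two steps: first find the most relevant entry in an external data source, then place it in the prompt that is sent to the language model. The knowledge base, function names, and prompt wording below are hypothetical. The retrieval here uses simple word overlap as a stand-in, whereas production systems typically use embedding-based search, and the actual call to a language model is left out.

```python
import re

# Hypothetical knowledge base, invented for this illustration only.
DOCUMENTS = [
    "Our support team is available Monday to Friday from 9 am to 5 pm.",
    "Orders can be returned within 30 days of delivery.",
    "Shipping within Germany takes two to three business days.",
]

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "Within how many days can orders be returned?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)  # this prompt would then be sent to a language model
```

Because the answer is grounded in the retrieved entry, the model can respond with information that was not part of its training data.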

Overall, language models and AI are closely linked: they enable computers to be more human-like and effective in many areas of application. The ongoing development of language models contributes to the continuous improvement of AI systems.

How Do Language Models and NLP Belong Together?

Language models and natural language processing (NLP) are closely related topics, but they are not identical. Language models are central tools within NLP, as they enable natural language to be understood. They can, for example, automatically write texts or conduct dialogues.

NLP, on the other hand, is an interdisciplinary field of research that deals with the processing of natural language by computers. The goal of NLP is to equip machines to recognize, interpret, and generate language. This involves both the automatic recognition of texts and their automatic generation.

Language models are now at the heart of many NLP applications. With advances in large language models (LLMs) such as GPT, Llama, and Gemini, the performance of NLP systems has increased significantly in recent years.

Get to Know Language Models Used in Practice

Now that we've covered the theory, it's time to get practical. At moinAI, we actively use large language models (LLMs) such as OpenAI's GPT-4o to intelligently support our products and services. By using the latest GPT models, our users benefit directly from the latest developments in AI-powered language processing.

Discover what a chatbot prototype could look like for your company and how it can be used in practice.
